Share the Fetching


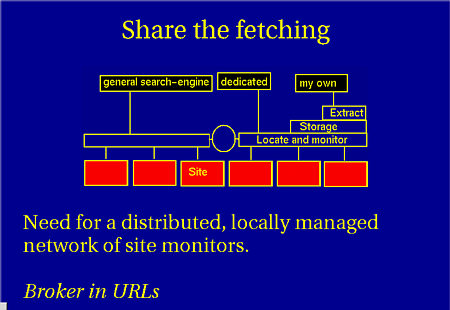

By creating this network, we remove the bottom level of functionality from the search engines, which makes them much easier and cheaper to build and gives them more accurate data. The sites do not need to install extra software because, from their point of view, nothing changes.

Search engines ask the monitoring network questions like: "all sites in Dutch", "all pages from this list of universities", "all pages containing the word Gigabit", or "all URLs".
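As a rough illustration of such questions, the sketch below filters a small in-memory list of page records. The `PageRecord` type, the `query` function, its parameters, and the example URLs are all hypothetical; the slide does not specify how the monitoring network stores or exposes its data.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class PageRecord:
    """Hypothetical record the monitoring network might keep per page."""
    url: str
    language: str          # language code such as "nl" or "en"
    words: frozenset       # lower-cased words found on the page


# Made-up sample data, for illustration only.
RECORDS = [
    PageRecord("http://www.example.nl/index.html", "nl",
               frozenset({"welkom", "gigabit"})),
    PageRecord("http://www.example.edu/research.html", "en",
               frozenset({"gigabit", "network"})),
    PageRecord("http://www.example.com/about.html", "en",
               frozenset({"company"})),
]


def query(records, language=None, hosts=None, word=None):
    """Return the URLs of all records that match every filter given.

    A filter left as None is ignored, so query(records) means "all URLs".
    """
    result = []
    for rec in records:
        if language is not None and rec.language != language:
            continue
        if hosts is not None and urlparse(rec.url).hostname not in hosts:
            continue
        if word is not None and word.lower() not in rec.words:
            continue
        result.append(rec.url)
    return result
```

Under these assumptions, `query(RECORDS, language="nl")` stands for "all pages in Dutch", `query(RECORDS, hosts={"www.example.edu"})` for "all pages from this list of universities", `query(RECORDS, word="Gigabit")` for "all pages containing the word Gigabit", and `query(RECORDS)` for "all URLs".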

We can even add more layers of the search engine to the network, for instance generalized extraction software: modules that assist a spider in building correct indexes. Examples are modules that translate the various document formats to plain text, and software that extracts nouns and verbs from text. The second paper describes more possibilities.
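One such extraction module might look like the following minimal sketch, which reduces an HTML document to plain text using Python's standard `html.parser`. The `TextExtractor` class and the `html_to_text` function are hypothetical names chosen for this example, not part of the proposed network.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0   # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html):
    """Return the text content of an HTML string with whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())
```

A spider could pass each fetched page through `html_to_text` before indexing; other formats would need their own converters behind the same kind of interface.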

Mark A.C.J. Overmeer, AT Computing bv, 1999.